By the middle of the next decade, we will have seen an economic explosion like none in our lifetimes.

The amount of economic productivity we will see, driven by the explosion in "intelligence," is going to baffle almost everyone.

It is hard for us to wrap our minds around what is taking place.

Perhaps this will frame it for everyone:

Jensen Huang, the CEO of NVIDIA, spoke at a Salesforce conference. He stated that Moore's Law produces roughly a 100x increase over a decade.

In his estimation, AI is moving at a pace of 100,000x per decade.

Let that sink in.

Moore's Law Squared

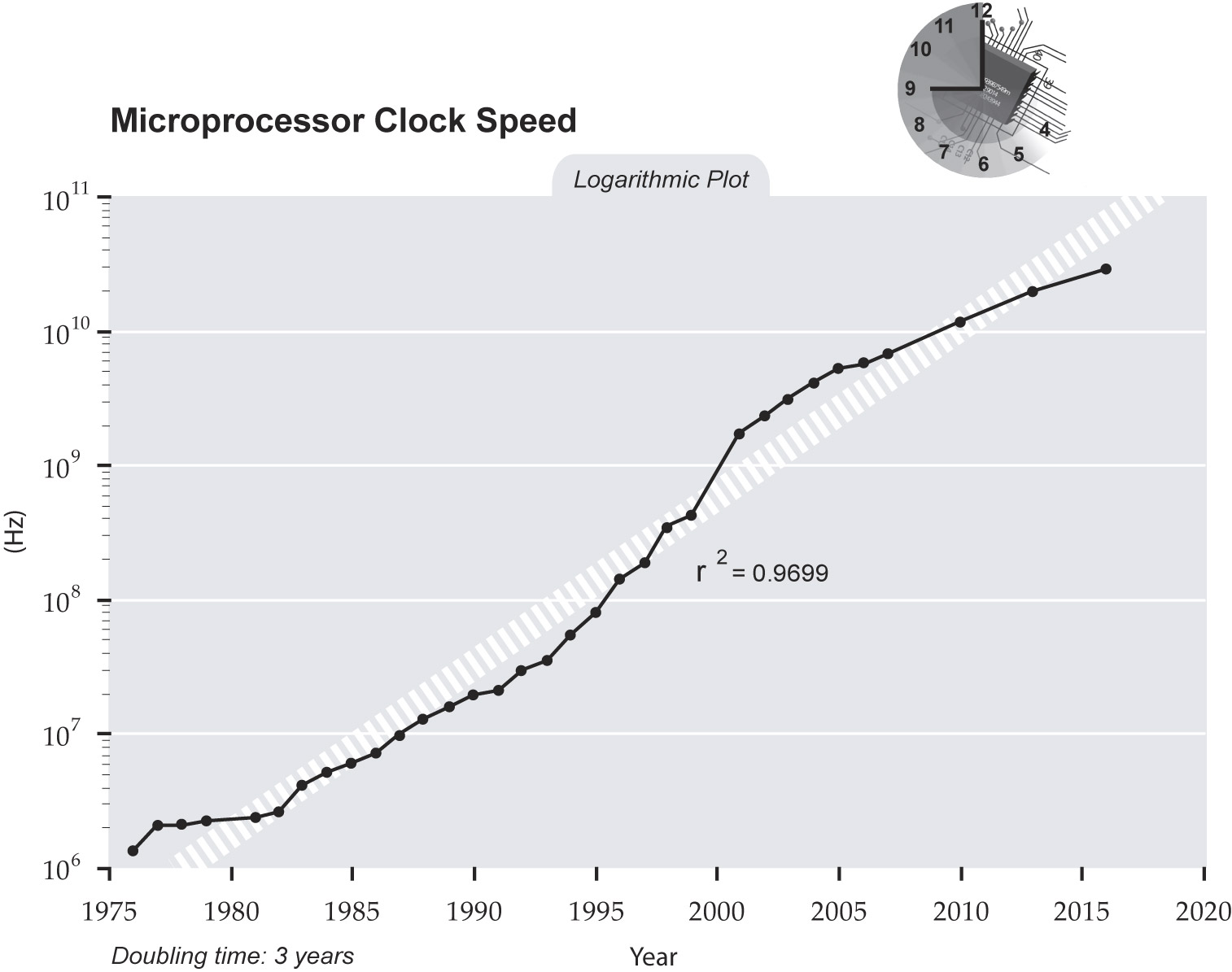

When we look at how the world changed over the last 40 years, much of it can be attributed to the semiconductor era, a time when semiconductors were the driving force.

The digital age owes its growth to the observation that Moore made so many decades ago. Certainly the results, when viewed over a long period of time, are outstanding.

But what about a pace that is 1,000x what we saw in that time period? How do we account for that?
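To make the comparison concrete, here is a minimal sketch that converts the two per-decade figures quoted above into yearly growth factors. It assumes growth compounds at a constant rate across the decade, which is a simplification, and the function name `annual_factor` is made up for illustration.

```python
def annual_factor(decade_multiplier: float, years: int = 10) -> float:
    """Constant yearly growth factor that compounds to the given multiplier.

    Assumption: growth is spread evenly (geometrically) over the period.
    """
    return decade_multiplier ** (1.0 / years)


moore = annual_factor(100)        # Moore's Law figure quoted above: 100x per decade
ai = annual_factor(100_000)       # Huang's AI estimate quoted above: 100,000x per decade

print(f"Moore's Law: {moore:.2f}x per year")
print(f"AI pace:     {ai:.2f}x per year")
print(f"Gap over a decade: {100_000 / 100:,.0f}x")
```

Under that assumption, Moore's Law works out to roughly 1.58x per year, while the AI estimate works out to roughly 3.16x per year. That is the difference between compute doubling about every 18 months and compute more than tripling every single year.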

It is a simple fact that most of us cannot even begin to comprehend it. The numbers are just incredible. To garner a full picture brings us into the world of science fiction.

Nevertheless, that is likely to be our reality.

Bits move much faster than atoms. We can see this in how the digital world operates compared to the physical.

What is important here is the pace. Within a year, we will likely have AI agents that are, in essence, automated skills. We will have millions of them (eventually tens of billions), built on top of tools. These can be applied to any online activity.

Consider the economic implications of that.

Whatever needs to be done in the digital realm can be handled by an agent.

For example, what happens when an AI agent can summarize all the emails an executive receives and generate a reply? How much time does that save?

Keep in mind, according to Huang, we are going 1,000 times faster than the pace of classical computing. With generative AI, we are actually looking at a new form of computing.

Here is a basic plot, on a logarithmic chart, of compute:


While this is a different metric, consider how much faster the pace is.

Economic Explosion

Compute translates into economic output. This is the basic equation going forward. As we move into AI agents, robots, and spatial intelligence, things are going to shift massively.

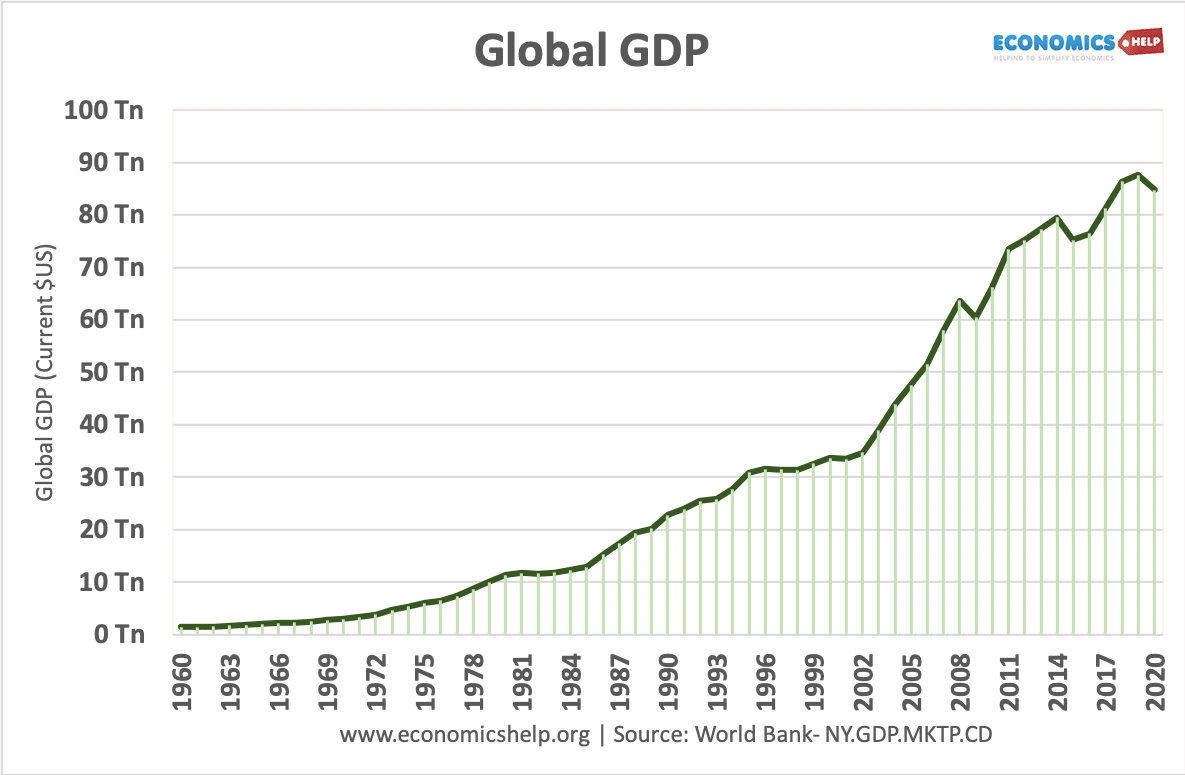

To give us an idea, here is a chart of global GDP. Notice the impact of compute on this:

Will we get another economic singularity due to the shift in technology?

To achieve this, we will need somewhere around 30% annual growth. That is more than 10x the present growth rate.
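For a sense of what 30% annual growth means once it compounds, here is a rough sketch. The 3% baseline is an assumption standing in for "the present growth rate" (the text only says 30% is more than 10x that rate), and the helper names are hypothetical.

```python
import math


def doubling_time(rate: float) -> float:
    """Years for output to double at a constant annual growth rate."""
    return math.log(2) / math.log(1 + rate)


def growth_multiple(rate: float, years: int = 10) -> float:
    """Total growth multiple after compounding for the given number of years."""
    return (1 + rate) ** years


PRESENT_RATE = 0.03      # assumed baseline, not a figure from the text
SINGULARITY_RATE = 0.30  # the 30% annual growth discussed above

for label, rate in [("present", PRESENT_RATE), ("singularity", SINGULARITY_RATE)]:
    print(
        f"{label:>11}: doubles every {doubling_time(rate):.1f} years, "
        f"{growth_multiple(rate):.1f}x in a decade"
    )
```

At the assumed 3%, the economy doubles about every 23 years. At 30%, it doubles in under 3 years and grows nearly 14x in a decade, which is why the slope of the GDP chart would look so different.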

It seems unlikely until you look at the first chart and picture a 1,000x change in the pace. This is certainly going to have a massive impact upon the second chart.

Of course, as I have stated on a number of occasions, GDP is an awful metric. In this technological era, it is so outdated it is ridiculous. However, we do not have anything to replace it, hence we are stuck with it.

That said, we are still going to see a massive shift in the slope of the second chart. By 2035, we will have a much higher growth rate due to AI advancement. On top of that, I predict a full economic singularity might arrive in the 2040s.

The next decade will lay the foundation for what is going to take place after.

When it comes to AI, if this is a 100-story building, we are on the second floor.
